It’s been 5 years since I purchased the HP N40L, so it was time to upgrade to a newer, faster system. The N40L is a nice unit in a small package – although a bit slow for my needs.

Enter the Lenovo TS140 70A4003AUX. I found a deal on Newegg that I couldn’t refuse, so I immediately added it to the cart and checked out before changing my mind.

There are a few models in the TS140 series. The one I chose has the following specs:

- Intel Xeon E3-1226 v3 3.3GHz

- 4GB 1600MHz RAM

- Intel

- No HDD

- No OS

For the full spec list, search Google for Lenovo TS140 70A4003AUX.

Purpose

I purchased this server to replace my N40L, which was serving as my ESXi host. While the N40L did a great job, its 8GB RAM limit and slow CPU restricted it to running a few VMs with light loads.

I installed ESXi 6.5 on the TS140 and plan to use it as my main (and only) ESXi host, running my firewall/router and my various test and development VMs.

Inside the Case

Immediately upon receiving it, I opened up the case to take a look inside. The cables are tidy, and everything looks very clean.

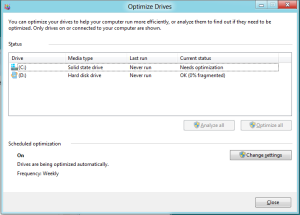

Storage

I was mostly interested in an internal USB port for ESXi, and was disappointed to learn that this server does not have one. No problem, I’ll just use one of the external USB ports instead.

There are 2 trays for 3.5″ hard drives, which can also hold 2.5″ drives like SSDs with the addition of an SSD bracket. This is what I did, installing a spare 64GB SSD that I had. I may upgrade to a larger SSD if necessary, but I think this should suffice for now.

The server also has an optical drive bay. The optical drive can be removed and a 2.5″ SSD or HDD bay installed in its place. I may go this route if I need the extra storage, but there’s no need for now, since all my storage is on a LUN on my Synology DS1512+.

Networking

There is only one network port, and it is shared with Intel AMT, which is used for remotely accessing and managing the server. I installed a 4-port Intel i350-T4 NIC that I had purchased for the N40L, so that takes care of the networking.

RAM

There’s not much that can be done with only 4GB of RAM these days, especially if the server is going to be used as a virtualization host. This server uses DDR3 ECC RAM. Since I was replacing the N40L, I removed its 8GB of RAM and installed it in the TS140, which is now running 12GB of RAM across 3 slots.

This is not the best solution, however. The server maxes out at 32GB of RAM across 4 slots, so I’ll be replacing the current RAM with 8GB modules in the near future.

Power Supply

The power supply seems to use a proprietary connector instead of the regular ATX connectors that we commonly see on PSUs and motherboards. This worries me a bit, but I knew about it in advance, so I’m going to live with it. The power supply is a fixed 285-watt Bronze unit, which is enough for my needs.

Cooling

The server came with plenty of low-noise fans, all covered with grilles. The system is pretty quiet and stays cool.

Power Consumption

This is another big reason why I wanted to replace the older server, and why I refused to use my old PC as a server. The TS140 sips power, sitting at around 25 watts at idle after ESXi has loaded. I never saw my power meter go higher than 50 watts. Keep in mind that this is with a single SSD and no mechanical drives. I’m sure the power consumption will be higher after adding a few mechanical drives.
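To put those readings in perspective, here is a rough sketch in Python that converts a constant draw in watts into energy used per year. It assumes the server runs around the clock at a steady draw, which is a simplification. The function name `annual_kwh` is just something I made up for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, ignoring leap years


def annual_kwh(watts: float) -> float:
    """Energy in kWh used over a year by a device drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000


idle_kwh = annual_kwh(25)  # idle reading from my power meter
peak_kwh = annual_kwh(50)  # highest reading I saw

print(f"Idle: {idle_kwh:.0f} kWh/year")  # Idle: 219 kWh/year
print(f"Peak: {peak_kwh:.0f} kWh/year")  # Peak: 438 kWh/year
```

Multiply the result by your own electricity rate to get a yearly cost estimate. The real figure will land somewhere between the two, depending on load.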

Conclusion

I’m very happy with the Lenovo TS140 so far. It is a huge upgrade over my previous server, and it serves my needs very well for now.

Having a server-grade CPU and a motherboard with remote out-of-band management capabilities makes this server well worth it.